You've read the title, and I'm sincerely hoping you didn't miss the developers, developers, developers reference. We're bringing the same energy to Ship AI with this blog.

We're going to start with what we think are the most important releases and wrap it up with our two cents on the future of AI transformation and how you can start shipping.


AI SDK 6 (beta)

For a quick preface, if you don't know what the AI SDK is: it's a unified way of calling near enough every AI model without having to change the way your code is written. It's also just TypeScript, so if you know how to write TypeScript, you know how to create a chatbot. We'll be covering it in depth soon, but for the time being, this section is dedicated purely to the latest release.

Release notes

This release isn't expected to have major breaking changes, so don't worry, you can breathe easily until AI SDK 7.

If you're looking for human approval, reliable structured outputs, and more granular control over complex multi-step tasks, this beta is definitely worth upgrading to. If you're happy with v5, moving to v6 should be straightforward whenever you're ready, or once it goes stable towards the end of 2025.

Or... to hell with it... Push it to prod, infosec haven't had a code red in a couple of days.

Loops are smarter

As agents get smarter, they call tools for you, think about the results, and keep going until they're done. However, this can get expensive as hell as the context grows and the toolset gets bigger. `ToolLoopAgent` helps: you can cap the number of steps the agent takes, and it defaults to 20, so you don't go broke.
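To make that concrete, here's a minimal, dependency-free sketch of what a tool loop with a step cap does. It's not the AI SDK's implementation (with the SDK you'd configure a `ToolLoopAgent` rather than write this yourself); the `Model` type, the message shape and the fake weather tool are all made up for illustration, and only the default cap of 20 comes from the release notes.

```typescript
// A model turn either asks for a tool or gives a final answer (hypothetical shape).
type ModelTurn =
  | { kind: "tool"; name: string; input: string }
  | { kind: "final"; text: string };

type ChatMessage = { role: "user" | "assistant" | "tool"; content: string };
type Model = (history: ChatMessage[]) => ModelTurn;
type Tools = Record<string, (input: string) => string>;

interface LoopResult {
  text: string | null; // null when the step cap was hit before a final answer
  steps: number;
  history: ChatMessage[];
}

// model -> tool -> model -> ... until a final answer or maxSteps (default 20).
function runToolLoop(model: Model, tools: Tools, prompt: string, maxSteps = 20): LoopResult {
  const history: ChatMessage[] = [{ role: "user", content: prompt }];
  for (let step = 1; step <= maxSteps; step++) {
    const turn = model(history);
    if (turn.kind === "final") {
      history.push({ role: "assistant", content: turn.text });
      return { text: turn.text, steps: step, history };
    }
    // Run the requested tool and feed the result back so the model can "think about it".
    const tool = tools[turn.name];
    const output = tool ? tool(turn.input) : `unknown tool: ${turn.name}`;
    history.push({ role: "assistant", content: `call ${turn.name}(${turn.input})` });
    history.push({ role: "tool", content: output });
  }
  // Budget exhausted: stop rather than loop (and spend) forever.
  return { text: null, steps: maxSteps, history };
}

// A fake model that checks the weather once, then answers.
const fakeModel: Model = (history) => {
  const last = history[history.length - 1];
  return last.role === "tool"
    ? { kind: "final", text: `It is ${last.content} in London.` }
    : { kind: "tool", name: "weather", input: "London" };
};

const result = runToolLoop(fakeModel, { weather: () => "14°C" }, "Weather in London?");
console.log(result.text, result.steps); // → It is 14°C in London. 2
```

The cap is the important bit: when a model keeps asking for tools, the loop stops at `maxSteps` and hands back `null` instead of quietly burning tokens.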

Human-in-the-loop approvals

Tools can now ask permission before risky actions. For example, mark a payment tool with `needsApproval` and it only runs once you approve it. Super helpful for dangerous updates or any step with money involved.
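Here's a rough sketch of the idea, assuming a made-up `GatedTool` shape where `needsApproval` is either `true`/`false` or a rule on the input (so small payments can skip the prompt). In the real SDK, approval typically goes through your UI rather than a synchronous `askHuman` callback, so treat this as the mental model, not the API.

```typescript
// Hypothetical tool shape: `needsApproval` is either a flat yes/no or a rule on the input.
interface GatedTool<I, O> {
  needsApproval: boolean | ((input: I) => boolean);
  execute: (input: I) => O;
}

type Outcome<O> = { status: "executed"; result: O } | { status: "denied" };

// Only call execute() if no approval is needed, or a human said yes.
function runGated<I, O>(tool: GatedTool<I, O>, input: I, askHuman: (input: I) => boolean): Outcome<O> {
  const rule = tool.needsApproval;
  const mustAsk = typeof rule === "function" ? rule(input) : rule;
  if (mustAsk && !askHuman(input)) {
    return { status: "denied" }; // execute() never runs, so no money moves
  }
  return { status: "executed", result: tool.execute(input) };
}

// Usage: payments over 100 need a human to sign off.
let charged = 0;
const pay: GatedTool<{ amount: number }, string> = {
  needsApproval: ({ amount }) => amount > 100,
  execute: ({ amount }) => {
    charged += amount;
    return `charged ${amount}`;
  },
};
const humanSaysNo = () => false;

runGated(pay, { amount: 20 }, humanSaysNo);   // executed: small enough to skip the prompt
runGated(pay, { amount: 5000 }, humanSaysNo); // denied: the human said no
console.log(charged); // → 20
```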

Keep in the loop

Subscribe at robotostudio.com

Structured outputs (and streaming)

Force answers into a fixed shape (like a form) with `Output.object`, `Output.array`, `Output.choice` or `Output.text`, and show partial results live as they stream in with `partialOutputStream`.
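The SDK does this validation for you from a schema, but it helps to see what "forcing a shape" actually buys you. This hand-rolled validator is an illustration only: `TicketTriage` is a hypothetical shape whose `priority` must be one of a fixed set of options (roughly the idea behind `Output.choice`), and anything that doesn't fit is rejected instead of leaking into your app.

```typescript
// The fixed "form" we want every answer to fit. A hypothetical example shape.
interface TicketTriage {
  summary: string;
  priority: "low" | "medium" | "high"; // a fixed set of choices
  tags: string[];
}

const PRIORITIES: readonly string[] = ["low", "medium", "high"];

// Returns a typed object, or throws if the model's raw text doesn't fit the shape.
function parseTriage(raw: string): TicketTriage {
  const data: unknown = JSON.parse(raw); // throws on anything that isn't JSON
  if (typeof data !== "object" || data === null) throw new Error("expected a JSON object");
  const { summary, priority, tags } = data as Record<string, unknown>;
  if (typeof summary !== "string") throw new Error("summary must be a string");
  if (typeof priority !== "string" || !PRIORITIES.includes(priority)) {
    throw new Error(`priority must be one of: ${PRIORITIES.join(", ")}`);
  }
  if (!Array.isArray(tags) || !tags.every((t) => typeof t === "string")) {
    throw new Error("tags must be an array of strings");
  }
  return { summary, priority: priority as TicketTriage["priority"], tags: tags as string[] };
}

const triage = parseTriage('{"summary":"Login broken","priority":"high","tags":["auth"]}');
console.log(triage.priority); // → high
```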

Reranking for better search

After you've retrieved results, `rerank` reorders them so the most relevant show up first, and `topN` controls how many come back. It's a better way of tweaking and tuning search results.
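Under the hood, reranking boils down to "score every result against the query, sort, keep the best N". The sketch below uses a deliberately dumb word-overlap score as a stand-in for a real reranking model; `toyRerank` and `overlapScore` are hypothetical names, and the `originalIndex`/`score`/`document` fields are just a convenient shape, not a claim about the SDK's return type.

```typescript
interface Ranked {
  originalIndex: number; // position in the original result list
  score: number;
  document: string;
}

// Toy relevance score: the fraction of query words that appear in the document.
// A real reranker uses a dedicated model; this stand-in just makes the ordering visible.
function overlapScore(query: string, doc: string): number {
  const words = query.toLowerCase().split(/\s+/).filter(Boolean);
  if (words.length === 0) return 0;
  const text = doc.toLowerCase();
  return words.filter((w) => text.includes(w)).length / words.length;
}

// Score everything, sort best-first (ties keep their original order), keep the top N.
function toyRerank(query: string, documents: string[], topN = documents.length): Ranked[] {
  return documents
    .map((document, originalIndex) => ({ originalIndex, document, score: overlapScore(query, document) }))
    .sort((a, b) => b.score - a.score || a.originalIndex - b.originalIndex)
    .slice(0, topN);
}

const docs = [
  "Pricing for the enterprise plan",
  "How to reset your password",
  "Password policy and reset rules",
];
const best = toyRerank("reset password", docs, 2);
console.log(best.map((r) => r.originalIndex)); // → [ 1, 2 ]
```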

Easy upgrade from v5

If you used v5, most code still works on v6. It's a nice, simple bump.

Key takeaways

Whilst most features of Next.js are self-explanatory, because we've pretty much nailed down the usual expectations of "what a website is", AI is the complete opposite.

It's the wild west, and people have only really cared about it for two years... No, AI didn't exist before two years ago. I don't believe you, ChatGPT invented it.

Workflows

Workflows are our personal favourite from the conference. They're a way of taking a traditional set of processes and organizing them one after another. It's a difficult concept to understand at first, but the more you think about it, the more powerful it is.

Let's start with what you expect from an AI agent. It's no longer limited to a simple chat response. Agents have to:

- Call tools

- Fetch data

- Wait for humans

- Retry on failure

- Pause for a week

- Wake back up like nothing happened

Basically, everything Neo failed at.

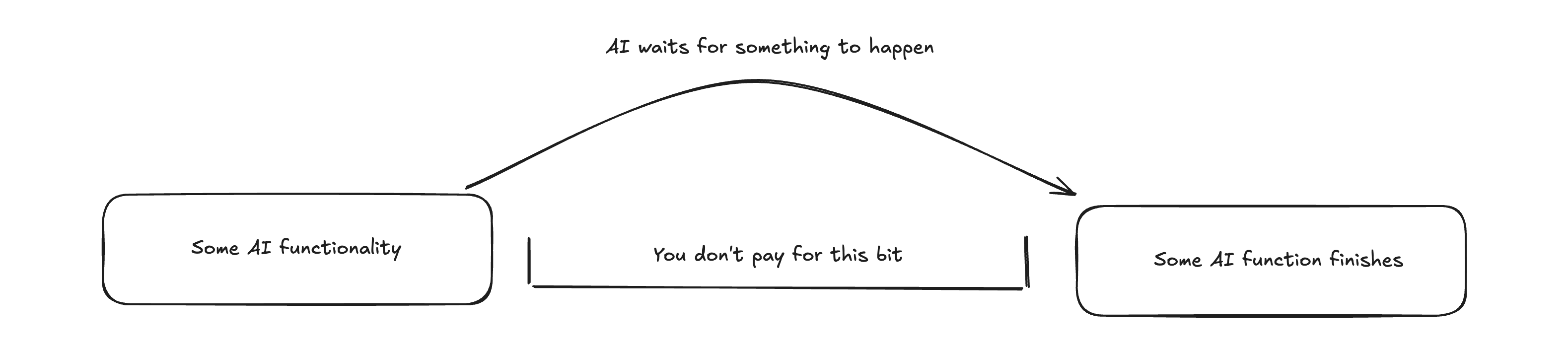

This is where every dev team quietly cries, because building that has always meant a spaghetti bowl of queues, cron jobs, retries, broken state, and a prayer you don't lose tokens mid-execution. So Vercel introduced the **Workflow Dev Kit**, enabling developers to build long-running, reliable AI agents without manually wiring tons of infrastructure.

You write one TypeScript function, mark steps inside it, and Vercel turns it into a durable workflow. This allows agents to:

- Call models and tools

- Respond to webhooks or events

- Wait for human approval

- Resume exactly where they left off

- Pause for as long as they need: you can literally `await sleep("365d")`
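The real kit turns ordinary functions marked with directives (`"use workflow"` and `"use step"` at the time of writing; check the Workflow docs) into durable workflows, so you never write the engine yourself. To help with the mental model, here's a toy version of the core trick, assuming an in-memory `Map` stands in for persistent storage: every step's result is recorded, and when a crashed run is retried, completed steps are replayed from the log instead of running again. `ToyWorkflow` and `onboard` are hypothetical.

```typescript
// The "log" stands in for durable storage (a database in real life).
type StepLog = Map<string, unknown>;

class ToyWorkflow {
  executions = 0; // how many step bodies actually ran in this attempt
  private readonly log: StepLog;

  constructor(log: StepLog) {
    this.log = log;
  }

  // Run `fn` at most once per step id. On a retry, return the recorded result instead.
  step<T>(id: string, fn: () => T): T {
    if (this.log.has(id)) return this.log.get(id) as T;
    this.executions++;
    const result = fn();
    this.log.set(id, result); // record before moving on to the next step
    return result;
  }
}

// A hypothetical onboarding flow. `crashAfterCreate` simulates the process dying mid-run.
function onboard(wf: ToyWorkflow, email: string, crashAfterCreate: boolean): string {
  const userId = wf.step("createUser", () => `user:${email}`);
  if (crashAfterCreate) throw new Error("process died");
  wf.step("sendWelcome", () => `welcome email sent to ${userId}`);
  return userId;
}

const log: StepLog = new Map();
try {
  onboard(new ToyWorkflow(log), "ada@example.com", true); // first attempt crashes
} catch {
  // In a real system, the platform would schedule a retry here.
}
const resumed = new ToyWorkflow(log);
onboard(resumed, "ada@example.com", false);
console.log(resumed.executions); // → 1 (createUser was replayed, only sendWelcome ran)
```

Conceptually, a durable sleep can work the same way: record when to wake up, let the process go away, and pick up from the log later. In this toy, note that code outside a `step` runs again on every retry, which is why the expensive or side-effecting work belongs inside steps.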

I honestly implore you to go read through it and try and get your head around the mental model, because this is going to shape the way you use AI in the future. Or you can just let us build it for you and pretend you built it.

AI gateway

This isn't really a Ship AI release per se, but to be frank, you should be using it already. It's a one-stop shop for all the different AI models. It also means you get a unified way of interacting with them and a single place to pay for tokens.

The alternative is signing up for every single model provider and creating like 5 different API keys.

Now you can stop duct-taping your backend together with ChatGPT responses.

More reasons to use it

- Unified billing

- Better rate limits

- More intelligent routing

- Failover when your favorite model has a meltdown

- Observability

- No infra changes when somebody releases their new weekly model

Probably should have just led with that.
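With the Gateway you don't write any of this yourself: in the AI SDK you pass a `provider/model` string and the routing happens on Vercel's side. Still, it's worth seeing what "unified interface plus failover" means in code. This is a hypothetical sketch where every provider implements the same one-method interface and a failing one is skipped in favour of the next; the provider names are made up.

```typescript
// Every provider exposes the same one-method interface (hypothetical shape).
type Provider = { name: string; generate: (prompt: string) => string };

interface GatewayResult {
  text: string;
  servedBy: string;
  failures: string[]; // providers that errored before one succeeded
}

// Try providers in order and fall back to the next one whenever a call throws.
function generateWithFailover(providers: Provider[], prompt: string): GatewayResult {
  const failures: string[] = [];
  for (const p of providers) {
    try {
      return { text: p.generate(prompt), servedBy: p.name, failures };
    } catch (err) {
      failures.push(`${p.name}: ${err instanceof Error ? err.message : String(err)}`);
    }
  }
  throw new Error(`all providers failed (${failures.join("; ")})`);
}

// Made-up providers: the first one is having a meltdown.
const providers: Provider[] = [
  { name: "provider-a/model-x", generate: () => { throw new Error("503 overloaded"); } },
  { name: "provider-b/model-y", generate: (prompt) => `echo: ${prompt}` },
];

const res = generateWithFailover(providers, "hi");
console.log(res.servedBy, res.text); // → provider-b/model-y echo: hi
```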

Fluid and active CPU pricing

I'm not even going to start to pretend I know how this works, but I can tell you the effect it has. It's going to cost you less to run AI functionality.

This is the official statement from some boffin at Vercel:

Fluid intelligently orchestrates compute across invocations. Multiple concurrent requests can share the same underlying resources. Teams saw up to 85% cost savings through optimizations like in-function concurrency. It means you're paying less for the compute you don't actually use.

For those of you who don't understand anything without a Marvel reference, like a well-adjusted adult:

Imagine the Avengers are facing a massive threat, and instead of each hero fighting with their own separate gear, they have access to a shared arsenal of super gadgets. Iron Man’s suits, Thor’s hammers, and Black Widow’s tech are all pooled together, allowing each hero to grab what they need when they need it. This way, they don’t need to carry everything themselves, and they can respond to threats more efficiently. In the tech universe, "Fluid" acts like Nick Fury, orchestrating the use of computer power. When multiple tasks come in, it ensures they can share the same resources, just like the Avengers sharing their gear. This teamwork saves a lot of resources, like saving the world without needing extra gadgets for every hero.
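If you'd rather have numbers than superheroes, here's a back-of-the-envelope model of why sharing compute suits AI workloads in particular: most of a request's life is spent waiting on the model API, not computing. This is emphatically not Vercel's pricing formula. The workload figures are invented, and the model assumes all requests arrive together, their waits overlap completely, and their CPU work runs back to back on one core.

```typescript
// Back-of-the-envelope only; NOT Vercel's pricing formula. All numbers are invented.
interface Workload {
  requests: number; // concurrent requests arriving together
  waitMs: number;   // time each request spends waiting on the model API (I/O)
  cpuMs: number;    // time each request actually spends computing
}

// One instance per request, each billed for its whole lifetime.
const perRequestInstances = (w: Workload): number => w.requests * (w.waitMs + w.cpuMs);

// One instance serves every request: the waits overlap, the CPU work runs back to back.
const sharedInstance = (w: Workload): number => w.waitMs + w.requests * w.cpuMs;

// Billing only the time the CPU is actually busy.
const activeCpuOnly = (w: Workload): number => w.requests * w.cpuMs;

const chatBurst: Workload = { requests: 10, waitMs: 2000, cpuMs: 50 };
console.log(perRequestInstances(chatBurst), sharedInstance(chatBurst), activeCpuOnly(chatBurst));
// → 20500 2500 500 (billable milliseconds)
```

Under those generous assumptions, sharing one instance takes the bill from 20,500 ms to 2,500 ms, and billing only active CPU takes it to 500 ms. Your real numbers depend entirely on your traffic.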


Vercel Sandboxes

Vercel Sandbox is "an ephemeral compute primitive". No, I didn't understand it either. Let's try again.

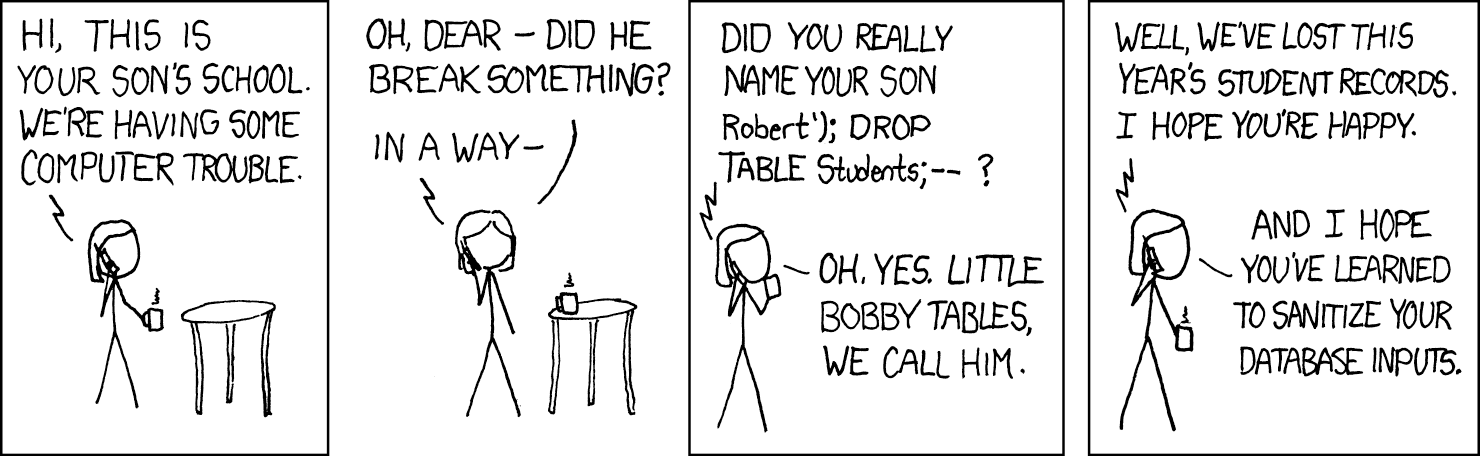

Vercel Sandbox is a way to run potentially unsafe or user-generated code without this happening:

Exploits of a Mom https://xkcd.com/327/


LLMs can generate code, and that code ends up running real programs. Sometimes it breaks catastrophically. Sandbox is built to contain that, and here's how:

- Every execution runs inside an isolated micro-VM

- No access to prod environments

- No “oops we just deleted our own database” moment

- Perfect for validating agent-generated fixes before a human approves them

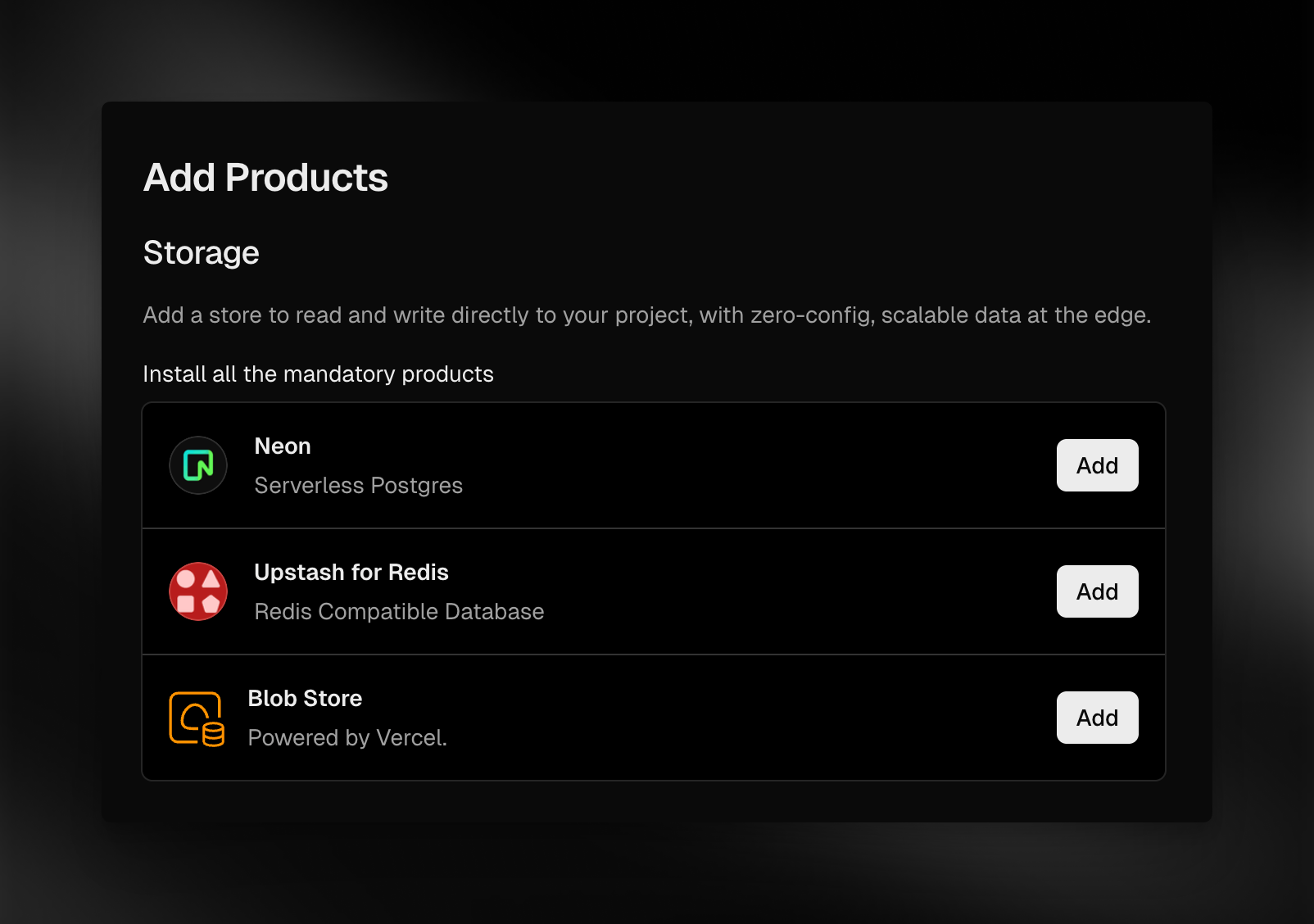
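Sandbox gives you that isolation through micro-VMs. You can't reproduce a micro-VM in a few lines, but you can see the shape of the pattern: run the generated code somewhere else, don't hand it your secrets, put a hard timeout on it, and inspect the result before a human approves anything. The sketch below uses a plain Node child process for that, with one big caveat: a child process is NOT a security boundary (it can still touch your filesystem and network), which is exactly why a micro-VM is used. `runUntrusted` is a hypothetical helper.

```typescript
import { spawnSync } from "node:child_process";

interface RunResult {
  ok: boolean;             // exited cleanly with code 0
  exitCode: number | null; // null if the process was killed (e.g. by the timeout)
  stdout: string;
  stderr: string;
}

// Runs a snippet of (agent-generated) JavaScript in a separate Node process.
// Illustration only: this is NOT an isolation boundary like a micro-VM.
function runUntrusted(code: string, timeoutMs = 2000): RunResult {
  const proc = spawnSync(process.execPath, ["-e", code], {
    env: {},            // no inherited DATABASE_URL, API keys or other secrets
    timeout: timeoutMs, // kill runaway code instead of waiting forever
    encoding: "utf8",
  });
  return {
    ok: proc.status === 0,
    exitCode: proc.status,
    stdout: proc.stdout ?? "",
    stderr: proc.stderr ?? "",
  };
}

// Validate an agent's proposed fix before a human ever looks at it.
const check = runUntrusted("console.log([3, 1, 2].sort().join(','))");
console.log(check.ok, check.stdout.trim()); // → true 1,2,3
```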

Marketplace of Agents & AI tools

Vercel "just works" with most apps out of the box. In short, you choose the tools you need, hit the "add" button, and rather than going off to manually configure databases and blob storage, it's all done for you. It means your focus can be purely on shipping features instead of micro-managing infrastructure.

If you don't know what I mean, go deploy the Next.js AI Chatbot. It uses the AI Gateway, a Neon database, Vercel Blob storage, Upstash and a bunch more, all configured through .env variables.

You can do it all in one go by pressing a couple of buttons and set up the entire infrastructure for a chatbot. If you've ever tried doing this manually, it saves you roughly a couple of hours, plus at least one annoying bug fix when you forget to add an .env variable.
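Integrations avoid that bug by injecting the variables for you. If you're wiring anything up by hand, a tiny startup check turns "forgot an env var" into an obvious error instead of a mystery. The variable names below are hypothetical examples, not the chatbot template's actual list.

```typescript
// Returns every required variable that is unset or blank.
function missingEnv(required: string[], env: Record<string, string | undefined>): string[] {
  return required.filter((key) => (env[key] ?? "").trim() === "");
}

// Hypothetical names for illustration; use whatever your integrations actually inject.
const REQUIRED_ENV = ["AI_GATEWAY_API_KEY", "DATABASE_URL", "BLOB_READ_WRITE_TOKEN"];

const missing = missingEnv(REQUIRED_ENV, process.env);
if (missing.length > 0) {
  // In a real app you'd probably throw here so the deploy fails loudly.
  console.error(`Missing env vars: ${missing.join(", ")}`);
}
```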

AI Agents for enterprises

Building AI for SMEs is one thing, but for enterprise, you're entering a whole new world of pain. We're going to help you fast-track getting your first agent out, and cover the topics that infosec will want to have a word with you about.

Observability

We've mentioned this briefly before, but observability is going to be your lifeblood for debugging niche issues with your agent. You can step through prompts literally message by message with Vercel and see what tools did or didn't fire. It's also a great way of understanding failover with models like Gemini and Claude, which have been notorious for choking.
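Vercel's tooling records all of this for you; the sketch below only shows the core idea behind "see what tools did or didn't fire". It wraps each (hypothetical) tool so every call lands in a trace with its input, outcome and duration, including the calls that throw. `traced` and `TraceEntry` are made-up names.

```typescript
interface TraceEntry {
  tool: string;
  input: string;
  ok: boolean;
  output: string; // the result, or the error message if the tool threw
  ms: number;
}

// Wrap a tool so every call is recorded, including failures.
function traced(name: string, fn: (input: string) => string, trace: TraceEntry[]): (input: string) => string {
  return (input) => {
    const start = Date.now();
    try {
      const output = fn(input);
      trace.push({ tool: name, input, ok: true, output, ms: Date.now() - start });
      return output;
    } catch (err) {
      const message = err instanceof Error ? err.message : String(err);
      trace.push({ tool: name, input, ok: false, output: message, ms: Date.now() - start });
      throw err; // still surface the error to the agent
    }
  };
}

const trace: TraceEntry[] = [];
const search = traced("search", (q) => `3 results for ${q}`, trace);
const charge = traced("charge", () => { throw new Error("card declined"); }, trace);

search("pricing");
try {
  charge("42");
} catch {
  // the agent would see this error and decide what to do next
}
console.table(trace.map(({ tool, ok, output }) => ({ tool, ok, output })));
```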

BotID

Now, this especially applies if you're running the agent publicly. BotID is going to save you from those five-figure bills if somebody decides to hit your agent with brute-force prompts.

If you haven't seen it before, it's basically Google's reCAPTCHA, except it doesn't suck and doesn't put a label in the bottom right of your screen. It's invisible, and it's backed by one of the most comprehensive and impressive bot detection databases. It also has active AI in there somewhere, so it can do some crazy stuff like this.

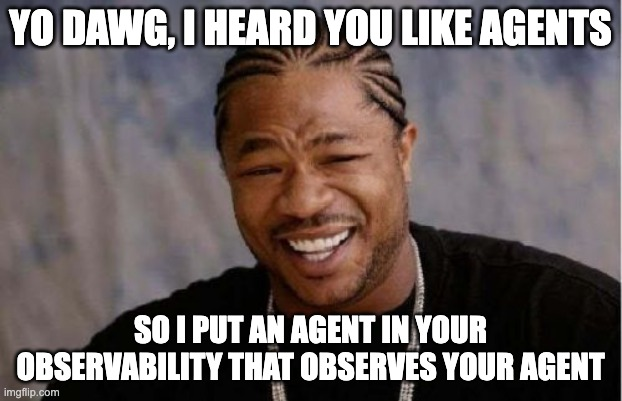

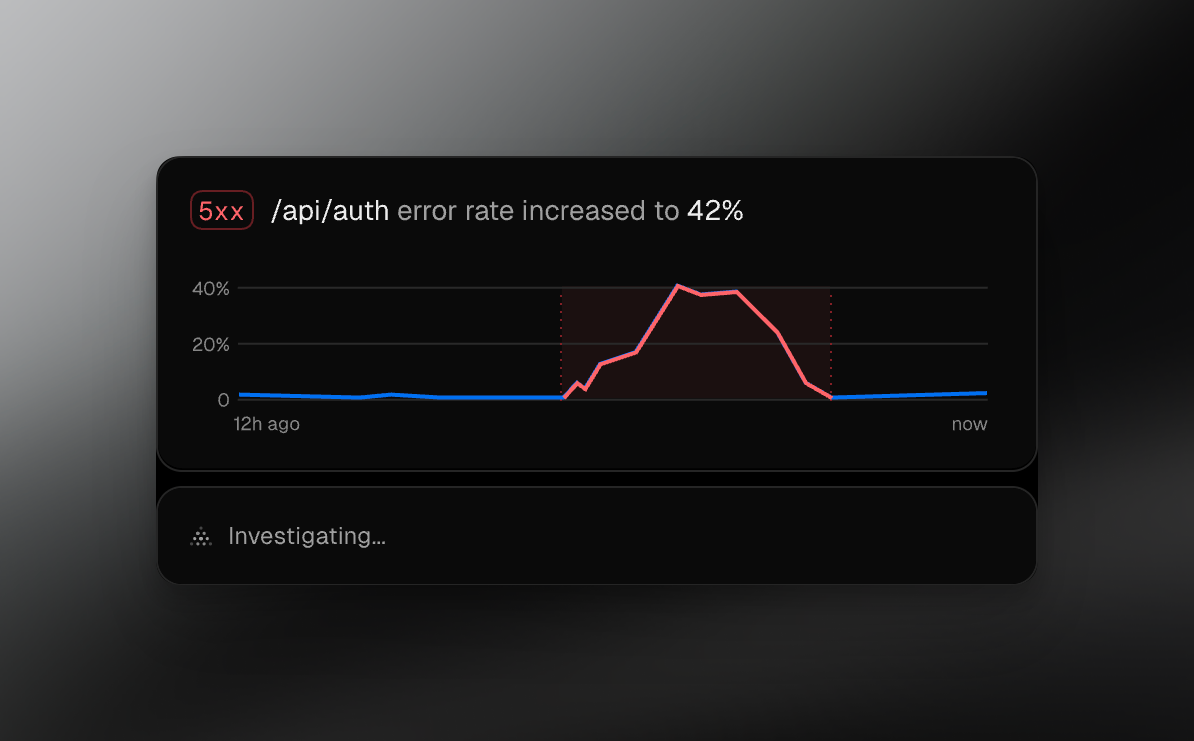
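The pattern that saves you from those bills is simple: check for bots before you spend a single token. In a real app the check would be BotID's server-side verification (documented as `await checkBotId()` from `botid/server` at the time of writing, alongside a client-side setup; check the BotID docs). Here it's injected as a plain synchronous function so the sketch runs on its own, and `handleChat` and `ChatReply` are hypothetical names.

```typescript
// Stand-in for a real bot check; BotID's server-side check would go here.
type BotCheck = () => { isBot: boolean };

interface ChatReply {
  status: number;
  body: string;
}

// Check first, spend tokens second: bots get a 403 before any model call happens.
function handleChat(prompt: string, checkBot: BotCheck, callModel: (p: string) => string): ChatReply {
  if (checkBot().isBot) {
    return { status: 403, body: "Access denied" };
  }
  return { status: 200, body: callModel(prompt) };
}

// A fake model that counts how often it was (expensively) called.
let modelCalls = 0;
const countingModel = (p: string): string => {
  modelCalls++;
  return `answer to ${p}`;
};

const botReply = handleChat("hi", () => ({ isBot: true }), countingModel);
const humanReply = handleChat("hi", () => ({ isBot: false }), countingModel);
console.log(botReply.status, humanReply.status, modelCalls); // → 403 200 1
```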

Agents in observability

Nothing like a 2007 meme to really set off an enterprise section, but it's actually an accurate portrayal of what Agents in Observability does.

It's effectively an agent that helps you make sense of what your errors are telling you. That's incredibly helpful, because a bare 5XX error doesn't really help you debug anything.

Now suppose the agent investigates and tells you there was an auth failure on the **vendor's side**. You've just saved all the time you'd have spent investigating, and you can bring it up as leverage the next time you're negotiating enterprise contracts.

Here's an example below.

What to build

With all these gems we've dropped so far about how to keep infosec happy and procurement up to date with bargaining points, you surely don't expect us to spoon-feed you an idea of what to build?

Well, lucky for both of us, we don't need to tell you, Vercel did it for us. I'll just let Malte take it away with some OSS tools you can start your agent builds with.

OSS lead agent

It enriches your sales flow so you can stop copy-pasting information about inbound leads by hand. You can tweak it to match exactly how you qualify leads, e.g. researching their most recent funding rounds, where the business is located, or whether they have any outstanding lawsuits. You name it, you can tweak it.

OSS data analyst agent

This is an agent that sits on top of your data warehouse and writes SQL queries to dig deep insights out of your data.

Speaking of deep dives on analytics data, this is also something PostHog does a great job with and is a prime example of what this agent could help with.

Let us build you something

We've already implemented a ton of agents for our clients, and we'd love to add you to the list. We've done everything from data enrichment and AI overviews of performance optimisation to SEO/AIO workflow audits. Give us a shout.

Wrap it up


If you haven't guessed already, the AI Cloud is designed to take infrastructure out of the equation so teams can ship faster. We're great believers in this as a business, and it's been wildly successful for us when it comes to building websites. Why not do the same for AI?

We'll leave you with this handy little list of what everything does if you need to refer back in the future

- AI Gateway: Pick any LLM and switch whenever you want

- Fluid Compute: Only pay when CPU is actually used

- Sandboxes: Run unsafe code safely

- Workflows: Durable and resumable implementations

- Observability: See every step, every token, and every failure

- **Zero config:** Deploy and forget

- **BotID:** A better reCAPTCHA

With all this amazing tooling, there's never been a better time to build an agent. We'd love to hear about what you're working on, and if you have any questions, we're more than happy to answer them.

You can bet your AaaS we're building something too (sorry)